Deploying a Nomad cluster with Terraform and Oracle for Nextflow

Empowering Nextflow Workflows for Small Teams with Terraform and Nomad

If you’re part of a small team in bioinformatics or computational science, getting your Nextflow pipelines up and running can be a real headache.

You’re trying to do awesome science, but instead, you’re wrestling with servers, trying to figure out how to scale things, and constantly worrying about whether your data’s in the right place.

That’s where the tf-nomad project comes in.

At Incremental Steps, we are working on an IaC (Infrastructure as Code) project that aims to offer a super easy way to spin up the infrastructure you need.

We’re using Terraform to automate the whole setup: think of it as writing down exactly what you want, and Terraform makes it happen. Our repo provisions all the networks, security rules, instances, etc. that the stack requires with a single command. Most importantly, if you need to change some resources (more instances, more memory…), you update the configuration and Terraform works out and applies only the changes needed.
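As a rough illustration of that change-and-reapply loop, here is a minimal sketch of a hypothetical `set_tfvar` shell helper (not part of the repo) that updates one variable, such as `nomad_client_count`, in a client’s `terraform.tfvars`. It assumes the file uses simple one-line `key = value` assignments; after editing, `terraform plan` shows what will change and `terraform apply` applies only that difference.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of tf-nomad): set `key = value` in a
# terraform.tfvars file, replacing the existing line or appending a new one.
# Assumes one simple `key = value` assignment per line.
set_tfvar() {
  local file="$1" key="$2" value="$3"
  if grep -qE "^[[:space:]]*${key}[[:space:]]*=" "$file"; then
    # Rewrite the existing assignment via a temp file
    # (portable across GNU and BSD sed, unlike `sed -i`).
    sed -E "s|^[[:space:]]*${key}[[:space:]]*=.*|${key} = ${value}|" "$file" > "$file.tmp" \
      && mv "$file.tmp" "$file"
  else
    printf '%s = %s\n' "$key" "$value" >> "$file"
  fi
}

# Usage, from inside a client folder such as clients/team_a:
#   set_tfvar terraform.tfvars nomad_client_count 4
#   terraform plan     # review the proposed changes
#   terraform apply
```

Editing the file rather than passing `-var` on the command line keeps the desired state in one place, so a later `terraform apply` without the flag does not silently revert the change.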

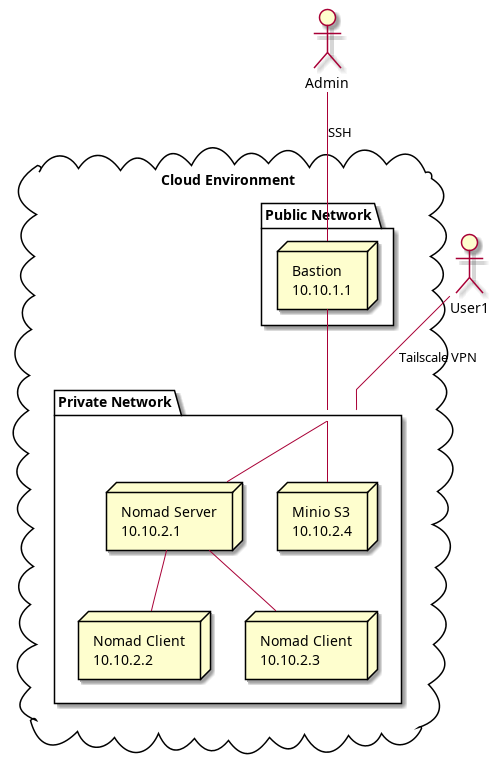

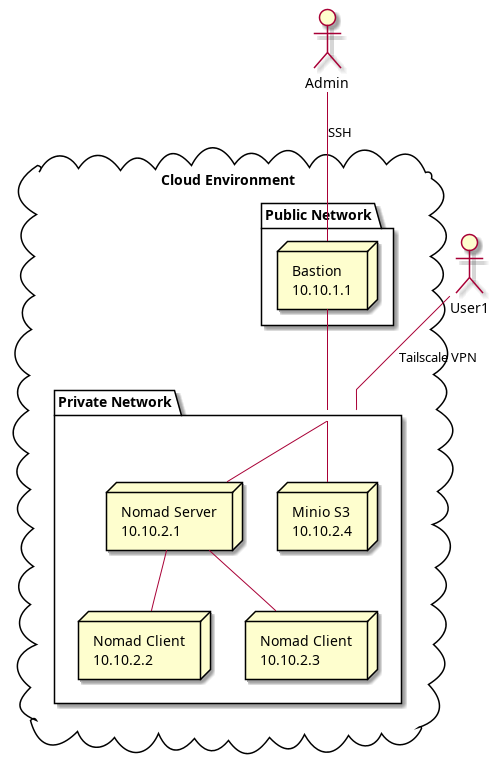

Once the networks are deployed, Terraform provisions the instances and creates a Nomad cluster on them, all running in a private network.

We’re also deploying a MinIO instance right inside your cluster. Why? So all your important data stays local, preventing those annoying data transfer fees and speeding up your Nextflow runs significantly.

And for the cherry on top, this solution sets up a Tailscale network. This means you can securely and easily run your pipelines directly from your local machine, connecting seamlessly to the cluster as if it were right next door.

But wait, there’s more: do you have several teams or customers and want to replicate the same infrastructure for each of them? The project lets you create as many "clients" as you need, each with its own space.

The repository

Here you can find the GitHub repository for the project.

The repo is structured in modules; for example, `vcn` describes the network and its security rules.

It also includes a simple Nextflow pipeline to validate each infrastructure once it is deployed.

Requirements

- An Oracle Cloud account. (Why? Basically because we have a free account to play with.) The good news is that, with Terraform, deploying the same solution on other providers (AWS, Google Cloud, etc.) follows a very similar process.
- An Internet domain (it can be a subdomain of your organization’s domain). Since your team will work from their local machines and the stack will be deployed in a remote cloud, we need DNS to tie all the parts together.

Deploying a cluster

Say you want to create a stack for TeamA. These are the steps to provision it:

- Create a folder at `clients/team_a` and copy the files from `clients/incsteps` into it.
- Set your variables in the `terraform.tfvars` file, for example `client_name`, `headscale_domain_name`, or `nomad_client_count`.
- Plan your Terraform changes and, if everything looks fine, apply them:

  ```
  $ terraform init
  $ terraform plan
  $ terraform apply
  ```

  If all goes well, Terraform creates all the required artifacts and outputs the relevant IPs, for example the public IP.
- Set your DNS "A" record to point to the new public IP.
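The first and last of these steps can be scripted. Below is a minimal sketch with two hypothetical helpers, neither of which is part of the repo: `new_client` copies the `clients/incsteps` template and sets `client_name`, assuming `terraform.tfvars` holds a line like `client_name = "incsteps"`; `dns_points_to` uses `dig` to check that the A record already resolves to the public IP Terraform printed. As a precaution, `new_client` also removes any local `.terraform` directory or `terraform.tfstate*` files copied from the template, so a new client never starts from another client’s local state. The domain and IP in the usage comments are placeholders.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of tf-nomad). Run from the repository root.

# Create clients/<name> from the clients/incsteps template and set client_name.
new_client() {
  local name="$1" template="clients/incsteps" dest="clients/$1"
  [ -d "$template" ] || { echo "template $template not found" >&2; return 1; }
  if [ -e "$dest" ]; then
    echo "refusing to overwrite existing $dest" >&2
    return 1
  fi
  cp -R "$template" "$dest"
  # Do not carry over local Terraform state or plugin cache from the template.
  rm -rf "$dest/.terraform" "$dest"/terraform.tfstate*
  # Assumes terraform.tfvars contains a line like: client_name = "incsteps"
  sed -E "s|^[[:space:]]*client_name[[:space:]]*=.*|client_name = \"${name}\"|" \
    "$dest/terraform.tfvars" > "$dest/terraform.tfvars.tmp" \
    && mv "$dest/terraform.tfvars.tmp" "$dest/terraform.tfvars"
}

# Succeeds if the domain's A record includes the given IP.
dns_points_to() {
  local domain="$1" ip="$2"
  dig +short "$domain" A | grep -qxF "$ip"
}

# Usage:
#   new_client team_a
#   (cd clients/team_a && terraform init && terraform plan && terraform apply)
#   dns_points_to nomad.example.com 203.0.113.10 && echo "DNS ready"
```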

Join the cluster

As an admin, you can SSH into the bastion machine using the private key (PEM file) you provided when creating the stack.

From this machine you can, for example, approve users to join the private network:

Say our domain is nomad.incsteps.com and a member of our team wants to use the cluster.

The user runs the following on their computer:

```
tailscale up --accept-routes --login-server=https://nomad.incsteps.com
```

and a URL with a token is generated.

The user sends you that token so you can approve the login with headscale on the bastion.

Once accepted, the user can reach the resources in this private network (in addition to their own network).
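On the bastion, the approval step can be wrapped in a small helper. This is a sketch under several assumptions: the registration URL printed by `tailscale up` ends with the key to approve; the headscale user (here the hypothetical `team-a`) already exists, for example created with `headscale users create team-a`; and your headscale version accepts `headscale nodes register --user ... --key ...`. Older releases used namespaces instead of users, so check `headscale nodes register --help` first. Both function names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of tf-nomad), meant to run on the bastion.
# Assumption: the URL the user sends ends with the key, e.g.
#   https://nomad.example.com/register/<key>

# Print the key (last path segment) from the registration URL.
key_from_url() {
  local url="${1%/}"          # tolerate a trailing slash
  printf '%s\n' "${url##*/}"
}

# Register the node for an existing headscale user.
# HEADSCALE is left unquoted on purpose so it can hold e.g. "sudo headscale".
approve_node() {
  local user="$1" url="$2" key
  key="$(key_from_url "$url")"
  ${HEADSCALE:-headscale} nodes register --user "$user" --key "$key"
}

# Usage:
#   approve_node team-a "https://nomad.example.com/register/<key-from-user>"
```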

Nextflow configuration

Now the Nextflow project configuration can use the private IPs:

```groovy
plugins {
    id "nf-nomad@0.3.1"
}

process.executor = "nomad"

docker.enabled = true
wave.enabled = true
fusion.enabled = true
fusion.exportStorageCredentials = true

aws {
    accessKey = 'minioadmin'
    secretKey = 'minioadmin'
    client {
        endpoint = "http://10.10.2.201:9000"   // (1) MinIO private IP
        s3PathStyleAccess = true
    }
}

nomad {
    client {
        address = "http://10.10.2.188:4646"    // (2) Nomad private IP
    }
    jobs {
        deleteOnCompletion = false
    }
}
```
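Because every client stack gets its own private IPs, it can help to generate this file rather than edit it by hand. A minimal sketch with a hypothetical `render_nf_config` function that takes the MinIO and Nomad private IPs (for example from `terraform output`) and writes the same configuration shown above. Credentials are read from the assumed variables `MINIO_ACCESS_KEY` and `MINIO_SECRET_KEY`, falling back to `minioadmin`, MinIO’s well-known default, which you would want to change for anything beyond testing.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of tf-nomad): write a nextflow.config for one
# client stack. Args: MinIO private IP, Nomad private IP, output file.
render_nf_config() {
  local minio_ip="$1" nomad_ip="$2" out="${3:-nextflow.config}"
  cat > "$out" <<EOF
plugins {
    id "nf-nomad@0.3.1"
}

process.executor = "nomad"

docker.enabled = true
wave.enabled = true
fusion.enabled = true
fusion.exportStorageCredentials = true

aws {
    accessKey = '${MINIO_ACCESS_KEY:-minioadmin}'
    secretKey = '${MINIO_SECRET_KEY:-minioadmin}'
    client {
        endpoint = "http://${minio_ip}:9000"
        s3PathStyleAccess = true
    }
}

nomad {
    client {
        address = "http://${nomad_ip}:4646"
    }
    jobs {
        deleteOnCompletion = false
    }
}
EOF
}

# Usage:
#   render_nf_config 10.10.2.201 10.10.2.188 nextflow.config
```

Nextflow reads a `nextflow.config` in the launch directory automatically, so no extra flag is needed when running a pipeline from there.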

And run their pipelines:

```
nextflow run hello
```

Nomad

The Nomad UI can be accessed with a web browser at `http://<nomad-server-ip>:4646`.

The current deployment doesn’t provide any login, but since you, as admin, can SSH into the machines, you can configure access as you see fit.
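Besides the web UI, the same port serves Nomad’s HTTP API, which is handy for checking from your laptop that the tailnet route to the cluster works before launching a pipeline. A minimal sketch: `/v1/status/leader` returns the current leader’s address and `/v1/nodes` lists the registered client nodes. The helper names are hypothetical; `NOMAD_ADDR` follows the Nomad CLI’s convention, so with it exported, `nomad node status` works too.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of tf-nomad) for Nomad's HTTP API.
# NOMAD_ADDR example: http://10.10.2.188:4646

# Print the leader's address; fails if the server is unreachable.
nomad_leader() {
  curl -fsS --max-time 5 "${NOMAD_ADDR:?set NOMAD_ADDR}/v1/status/leader"
}

# Print the JSON list of registered client nodes.
nomad_nodes() {
  curl -fsS --max-time 5 "${NOMAD_ADDR:?set NOMAD_ADDR}/v1/nodes"
}

# Usage (from a laptop joined to the tailnet):
#   export NOMAD_ADDR=http://10.10.2.188:4646
#   nomad_leader && nomad_nodes
```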

MinIO

The stack deploys a MinIO instance, so you can use Nextflow’s S3 features without needing an AWS account.

Moreover, the stack provisions an Oracle Cloud file system and mounts it on the MinIO instance, so data persists outside the instance.
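Since MinIO speaks the S3 API, standard S3 tooling works against it. A minimal sketch that uses the AWS CLI’s `--endpoint-url` option to create a bucket on the stack’s MinIO instance; the helper name, the `MINIO_ENDPOINT` variable and the bucket name are hypothetical, and the credentials default to `minioadmin` as in the Nextflow configuration above.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of tf-nomad): create a bucket on MinIO with
# the AWS CLI. MINIO_ENDPOINT example: http://10.10.2.201:9000
minio_mb() {
  local bucket="$1"
  # The AWS CLI needs some region; us-east-1 is MinIO's default.
  AWS_ACCESS_KEY_ID="${MINIO_ACCESS_KEY:-minioadmin}" \
  AWS_SECRET_ACCESS_KEY="${MINIO_SECRET_KEY:-minioadmin}" \
  AWS_DEFAULT_REGION="${AWS_DEFAULT_REGION:-us-east-1}" \
    aws --endpoint-url "${MINIO_ENDPOINT:?set MINIO_ENDPOINT}" s3 mb "s3://${bucket}"
}

# Usage:
#   MINIO_ENDPOINT=http://10.10.2.201:9000 minio_mb nf-work
#   nextflow run hello -w s3://nf-work/work
```

A bucket like this could then hold the Nextflow work directory (`-w`), which Fusion expects to live in object storage.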

Conclusion

This project offers a complete, easy-to-use solution for small scientific teams.

By combining Terraform for automated infrastructure, Nomad for efficient job scheduling, MinIO for secure, local data storage, and Tailscale for seamless remote access, we’ve built an environment that lets you focus on what truly matters: your research.